genRetinotopyMaps

Creates azimuth and elevation maps from retinotopic mapping recordings.

Description

This function generates the amplitude and phase components of the azimuth and elevation retinotopic maps. The maps are created from the cortical responses to a periodic stimulus [1-4].

Input

This function accepts only image time series as input, with dimensions Y, X and T. To calculate either the azimuth or the elevation map, the input data must contain the cortical responses to at least two opposite directions. The directions of the visual stimuli are encoded in degrees, where the cardinal directions left-right, bottom-up, right-left and top-down correspond to 0°, 90°, 180° and 270°, respectively. This information should be stored in an events.mat file located in the same directory as the input data.
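As an illustration of the direction encoding, the following Python sketch checks which maps can be computed from a set of recorded directions. It is not part of the toolbox: the names `DIRECTION_LABELS`, `MAP_PAIRS` and `available_maps` are hypothetical, and only the angle convention is taken from the text above.

```python
# Hypothetical names for illustration; only the angle convention comes from the text.
DIRECTION_LABELS = {0: "left-right", 90: "bottom-up", 180: "right-left", 270: "top-down"}

# Each map needs the responses to a pair of opposite directions.
MAP_PAIRS = {"azimuth": (0, 180), "elevation": (90, 270)}


def available_maps(recorded_directions):
    """Return the names of the maps that can be computed from the recorded directions."""
    recorded = {int(d) % 360 for d in recorded_directions}
    return [name for name, pair in MAP_PAIRS.items() if set(pair) <= recorded]
```

For example, `available_maps([0, 180])` yields only the azimuth map, while a recording with just 0° and 90° yields no map because neither pair of opposite directions is complete.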

The algorithm

For each direction, the Fourier transform is performed in the time domain and the first harmonic (i.e. the component at the stimulation frequency) is selected to calculate the amplitude and phase maps. The directions 0° and 180° are combined to create the azimuth map, while the directions 90° and 270° are combined to create the elevation map. The amplitudes of opposing directions are averaged, while the phases are subtracted in order to remove the response phase delay, as proposed by Kalatsky and Stryker [1].

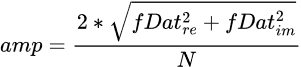
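The combination of opposite directions can be sketched for a single pixel as follows. This is a minimal Python illustration, not the toolbox code. It assumes the Kalatsky and Stryker convention in which the forward phase is position + delay and the reverse phase is -position + delay, so half of the wrapped phase difference recovers the position. The halving and the function names are assumptions.

```python
import cmath


def wrap_to_pi(angle):
    """Wrap an angle in radians to the interval [-pi, pi]."""
    return cmath.phase(cmath.exp(1j * angle))


def combine_opposite(amp_a, phase_a, amp_b, phase_b):
    """Combine the responses to two opposite directions for one pixel.

    Amplitudes are averaged. Phases are subtracted so that the response
    delay, which is common to both directions, cancels out.
    """
    amplitude = 0.5 * (amp_a + amp_b)
    # Assumed model: phase_a = pos + delay, phase_b = -pos + delay,
    # so phase_a - phase_b = 2 * pos.
    position_phase = 0.5 * wrap_to_pi(phase_a - phase_b)
    return amplitude, position_phase
```

With a position phase of 0.4 rad and a delay of 0.3 rad, the two directions have phases 0.7 and -0.1, and the function recovers 0.4 regardless of the delay.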

The amplitude and phase maps for each cardinal direction are calculated as in [4]. Given the fast Fourier-transformed data fDat(x,y,ξ) at the first harmonic frequency ξ, the amplitude is calculated as:

where re and im are the real and imaginary components of fDat and N is the size of the frequency dimension of fDat.

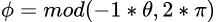

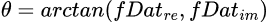

The phase map φ is calculated as:

where the angle θ is the four-quadrant inverse tangent of fDat(x,y,ξ):
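The amplitude and phase calculation can be sketched for one pixel's time series in Python. This is an illustration under stated assumptions rather than the toolbox implementation: the 2/N amplitude normalization is the common single-sided convention, the phase is wrapped to the 0 to 2π range mentioned in the Output section, and the sign convention of the phase is assumed. The function name `first_harmonic` is hypothetical.

```python
import cmath
import math


def first_harmonic(signal, n_sweeps):
    """Return (amplitude, phase) of the DFT component at bin n_sweeps.

    signal   : sequence of samples for one pixel (length N)
    n_sweeps : number of stimulus cycles in the recording (0-based DFT bin)
    """
    n = len(signal)
    # Single DFT coefficient at the stimulation frequency.
    coeff = sum(v * cmath.exp(-2j * math.pi * n_sweeps * t / n)
                for t, v in enumerate(signal))
    # Assumed normalization: single-sided amplitude, 2 * sqrt(re^2 + im^2) / N.
    amplitude = 2.0 * abs(coeff) / n
    # Four-quadrant inverse tangent of the imaginary and real components.
    theta = math.atan2(coeff.imag, coeff.real)
    # Wrap to [0, 2*pi); the sign convention is an assumption.
    phase = theta % (2 * math.pi)
    return amplitude, phase
```

For a test signal 3·cos(2π·2·t/64 + 0.5) sampled over 64 frames, `first_harmonic(signal, 2)` recovers an amplitude of 3 and a phase of 0.5 rad up to floating-point error.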

Output

The genRetinotopyMaps function creates the following .dat files:

- AzimuthMap

- ElevationMap

Each file contains the amplitude and phase maps with dimensions Y and X. If the phase map is not rescaled to visual angle, the phase values range from 0 to 2π. If the screen sizes and the viewing distance are provided, the output is rescaled to visual angle in degrees (see below for the necessary criteria). In this case, the visual angle origin (0,0) is placed at the center of the screen.

In order to rescale the phase map values to location units in the visual space (i.e. visual angle), the following criteria should be met (see [5] for more details):

- The optic axis of the eye should be perpendicular to the screen surface.

- The optic axis of the eye should point to the center of the screen.

- The full extent of the screen must be visible from the animal's point of view.
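When these criteria hold, the rescaling can be sketched geometrically. The Python example below is one plausible mapping, not necessarily the one used by the function: it assumes that the phase varies linearly with the stimulus position across the screen, with phase π at the screen center, and converts that position to an angle with the inverse tangent. The function name and arguments are hypothetical.

```python
import math


def phase_to_visual_angle(phase, screen_size_cm, viewing_dist_cm):
    """Convert a phase in [0, 2*pi] to visual angle in degrees.

    Assumption: phase 0 and 2*pi correspond to the two screen edges and
    phase pi to the screen center, which lies on the optic axis.
    """
    if screen_size_cm <= 0 or viewing_dist_cm <= 0:
        # Without screen geometry no rescaling is applied.
        return phase
    # Signed distance from the screen center, in centimeters.
    offset_cm = (phase / (2 * math.pi) - 0.5) * screen_size_cm
    return math.degrees(math.atan2(offset_cm, viewing_dist_cm))
```

With a 40 cm screen viewed from 20 cm, the screen edges fall at about -45° and +45° and the center at 0°, which matches the placement of the origin at the center of the screen.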

Parameters

The parameters of this function are the following:

nSweeps: Number of times that the visual stimulus was presented. This value is used to select the first harmonic.
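The link between the number of sweeps and the first harmonic can be illustrated with a short Python sketch: a signal that completes k cycles over the recording has its spectral peak at DFT bin k (0-based; in a 1-based language such as MATLAB this is index k + 1). The frame count and the helper name `dft_magnitudes` are chosen for illustration only.

```python
import cmath
import math


def dft_magnitudes(signal):
    """Return the DFT magnitudes for bins 0 .. N/2 of a real signal."""
    n = len(signal)
    return [abs(sum(v * cmath.exp(-2j * math.pi * k * t / n)
                    for t, v in enumerate(signal)))
            for k in range(n // 2 + 1)]


# Illustrative values: 120 frames containing 10 full stimulus sweeps.
n_frames, n_sweeps = 120, 10
signal = [math.sin(2 * math.pi * n_sweeps * t / n_frames) for t in range(n_frames)]

mags = dft_magnitudes(signal)
# Skip bin 0 (the mean) and find the strongest frequency component.
peak_bin = max(range(1, len(mags)), key=mags.__getitem__)
```

Here `peak_bin` equals `n_sweeps`, which is why the number of stimulus presentations is enough to locate the stimulation frequency in the spectrum.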

This approach is used in [4] to calculate the azimuth and elevation maps. Set this parameter to true to preprocess the movie of each direction as the average of all trials, each normalized by the median of an inter-trial period. For this to work, the trigger timestamps of each trial must be stored in the events.mat file and an inter-trial period must exist between trials.

If the average movie is used, the value of the nSweeps parameter is set to 1 sweep, given that each trial represents a single sweep of the visual stimulus.
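The average-movie preprocessing can be sketched for a single pixel as follows. The exact normalization is not specified above, so this Python example assumes a ΔF/F-style formula, (value - median) / median, using the median of the inter-trial frames that belong to each trial. The formula, the function name and the data layout are all assumptions.

```python
from statistics import median


def average_movie(trials, baselines):
    """Average several trials after normalizing each by its baseline median.

    trials    : list of equal-length sample lists, one per trial
    baselines : list of inter-trial sample lists, one per trial
    """
    normalized = []
    for trial, base in zip(trials, baselines):
        m = median(base)
        # Assumed normalization: relative change with respect to the baseline.
        normalized.append([(v - m) / m for v in trial])
    n_trials = len(normalized)
    # Average across trials, frame by frame.
    return [sum(frame) / n_trials for frame in zip(*normalized)]
```

Because the resulting movie spans a single trial, it contains one sweep of the stimulus, which is consistent with nSweeps being set to 1 in this case.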

This corresponds to the distance in centimeters between the animal's eye and the screen. This measure is used to rescale the phase maps to visual angle in degrees. If set to zero, no rescaling is applied.

This corresponds to the horizontal length of the screen in centimeters. This measure is used to rescale the phase maps to visual angle in degrees. If set to zero, no rescaling is applied.

This corresponds to the vertical length of the screen in centimeters. This measure is used to rescale the phase maps to visual angle in degrees. If set to zero, no rescaling is applied.

References

1. Kalatsky, Valery A., and Michael P. Stryker. 2003. ‘New Paradigm for Optical Imaging’. Neuron 38 (4): 529–45. https://doi.org/10.1016/S0896-6273(03)00286-1.

2. Marshel, James H., Marina E. Garrett, Ian Nauhaus, and Edward M. Callaway. 2011. ‘Functional Specialization of Seven Mouse Visual Cortical Areas’. Neuron 72 (6): 1040–54. https://doi.org/10.1016/j.neuron.2011.12.004.

3. Garrett, Marina E., Ian Nauhaus, James H. Marshel, and Edward M. Callaway. 2014. ‘Topography and Areal Organization of Mouse Visual Cortex’. The Journal of Neuroscience 34 (37): 12587–600. https://doi.org/10.1523/JNEUROSCI.1124-14.2014.

4. Zhuang, Jun, Lydia Ng, Derric Williams, Matthew Valley, Yang Li, Marina Garrett, and Jack Waters. 2017. ‘An Extended Retinotopic Map of Mouse Cortex’. Edited by David Kleinfeld. ELife 6 (January): e18372. https://doi.org/10.7554/eLife.18372.

5. Juavinett, Ashley L., Ian Nauhaus, Marina E. Garrett, Jun Zhuang, and Edward M. Callaway. 2017. ‘Automated Identification of Mouse Visual Areas with Intrinsic Signal Imaging’. Nature Protocols 12 (1): 32–43. https://doi.org/10.1038/nprot.2016.158.